Research

“Were you talking to me?”: Quantifying Perception of Speaker Directionality in Co-Located Extended Reality Environments

This thesis investigates the absolute aural threshold, or critical angle of directivity, at which listeners in digital extended-reality environments perceive a human talker as facing “towards” or “away” from them. While previous research indicates that spatial audio reproduction in telephony benefits user experience, extant technical challenges such as poor conversational clarity and delay often induce fatigue or a sense of exclusion. Interlocutors tend to rely consciously on the visual cue of rotating their head to convey focus or intent when physically co-located, while listeners encounter human vocal timbre as changing qualitatively depending on a speaker’s relative physical orientation. As part of investigating the variables underpinning perceptual qualifications of directivity, this study therefore explores conceptually how offering relative vocal directionality in telecommunications might refine intelligibility and/or enhance telepresence. Initial experimentation supports the preliminary conclusion that there exists a critical angle of directivity of ±30°, beyond which increasing speaker rotation results in decreasing metrics of user surety. Additional qualitative analysis of subject responses revealed a high degree of intermodal dependency between aural and visual cues, suggesting multiple impact points for future investigations and current communication interfaces in the wake of rapid digitalization owing to COVID-19.

Master’s Thesis — Available privately upon request.

[In Progress] Evaluating the Similarity of Recorded Speech with Respect to Off-Axis Spectral Change

As explained in the section immediately below, prepping my thesis stimuli requires comparing the off-axis response of two microphones, which in turn requires selecting the most similar takes. Because the recorded response is colored by the frequency content of each take, the similarity metrics need to be relatively invariant to purely spectral measurements.

Still very much a WIP, but the tentative approach is to construct a feature vector that is part time analysis (onset detection, Dynamic Time Warping) and part pitch/contour analysis.
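To make the Dynamic Time Warping part concrete, here is a minimal sketch of how two takes could be compared on a 1-D contour (for example a pitch track or an amplitude envelope). This is an illustration under assumptions, not the method used in the project: the function names `dtw_distance` and `most_similar_pair` are hypothetical, the local cost is a plain absolute difference, and a real pipeline would likely use an optimized library implementation.

```python
def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping between two 1-D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest alignment cost of a[:i] against b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b holds
                cost[i][j - 1],      # b advances, a holds
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


def most_similar_pair(takes):
    """Return (distance, i, j) for the two takes with the smallest DTW distance."""
    best = None
    for i in range(len(takes)):
        for j in range(i + 1, len(takes)):
            d = dtw_distance(takes[i], takes[j])
            if best is None or d < best[0]:
                best = (d, i, j)
    return best
```

Because DTW stretches and compresses the time axis, two takes with the same contour spoken at slightly different speeds score as close, which is the kind of timing tolerance that comparing takes needs.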

[In Progress] Comparing Off-Axis Response of the Earthworks M30 and Neumann KU-100

As part of prepping stimuli for my thesis experiment, I needed to verify that the Earthworks M30 behaves similarly to the Neumann KU-100 when presented with an off-axis speaker. The known reduction in high-frequency content towards the sides and back of a speaker makes spectral centroid a good candidate for a comparative metric. However, as shown in Fig. 1, the recorded speech samples from the initial test still contained too much variation over time to provide meaningful data.
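For reference, the spectral centroid is the magnitude-weighted mean frequency of a frame's spectrum. The sketch below shows one way to compute it per frame and then average it over a segment. It is an illustration only, not the analysis code behind the figures: the function names and the `frame_size` default are assumptions, and the naive DFT is used purely to keep the example dependency-free (real audio would call for an FFT and windowing).

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (fine for short frames only)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency of one frame, in Hz."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0  # silent frame: centroid is undefined, report 0 by convention
    n = len(frame)
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total


def mean_centroid(signal, sample_rate, frame_size=64):
    """Average the per-frame centroid over non-overlapping frames."""
    starts = range(0, len(signal) - frame_size + 1, frame_size)
    values = [spectral_centroid(signal[s:s + frame_size], sample_rate) for s in starts]
    if not values:
        raise ValueError("signal is shorter than one frame")
    return sum(values) / len(values)
```

A take with less high-frequency energy yields a lower centroid, so an off-axis recording would be expected to score lower than the on-axis one.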

As a temporary way to test later methods of comparison, I averaged the spectral centroid and manually selected the most “similar” section of the takes, with the hope of displaying slightly more cohesive data. (Fig. 2)

Fig. 1 - Spectral centroid of the first take at each rotation

Fig. 2 - Spectral centroid of the average from each position

Fig. 3 - Average of average spectral centroid

The results in Fig. 2 were still unclear and difficult to interpret, so I averaged the spectral centroid across the full duration of the segment, yielding a single value for each speaker rotation (Fig. 3).

Putting it on a polar plot made the data more interpretable, but the initial issue remains: there is potentially too much variability in the data to begin with.

This necessitates coming up with a method to quantify the similarity between takes (to avoid the Fig. 1 issue). I’m going to make that its own project and will update this when progress has been made there.

[In Progress] Comparing Latency of NYU’s Corelink framework to existing AoIP solutions

As part of ensuring the viability of the Corelink framework as a real-time audio transport protocol, measurements in various configurations are being taken against existing solutions such as Dante, JackTrip, and Zoom.
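One common way to measure end-to-end audio latency is a loopback test: send a known test signal through the transport, record what comes back, and find the lag that best aligns the two. The sketch below illustrates that idea with a brute-force cross-correlation. It is an assumption about how such a measurement could be made, not a description of the Corelink test setup, and the function names are hypothetical.

```python
def estimate_delay_samples(reference, recorded, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlate the reference against the recording shifted by `lag`.
        score = sum(
            reference[i] * recorded[i + lag]
            for i in range(len(reference))
            if i + lag < len(recorded)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def delay_ms(reference, recorded, sample_rate, max_lag):
    """Convert the estimated lag to milliseconds."""
    return 1000.0 * estimate_delay_samples(reference, recorded, max_lag) / sample_rate
```

For example, if the recorded signal is the reference preceded by 5 samples of silence at a 1000 Hz sample rate, `delay_ms` reports 5.0 ms. A short, broadband test signal (a click or a noise burst) gives a sharper correlation peak than a steady tone.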

Evaluating Change in Spectral Magnitude Precision of a Binaural Chorus Effect

[In Progress]

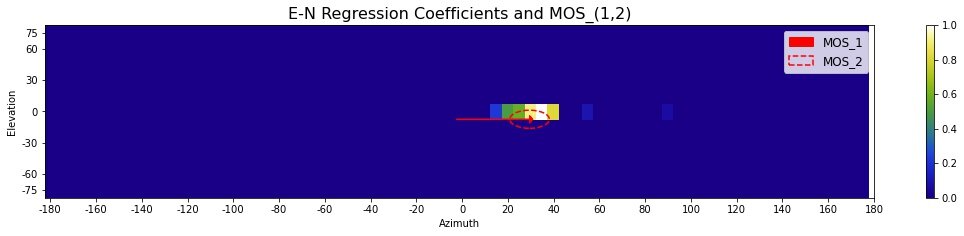

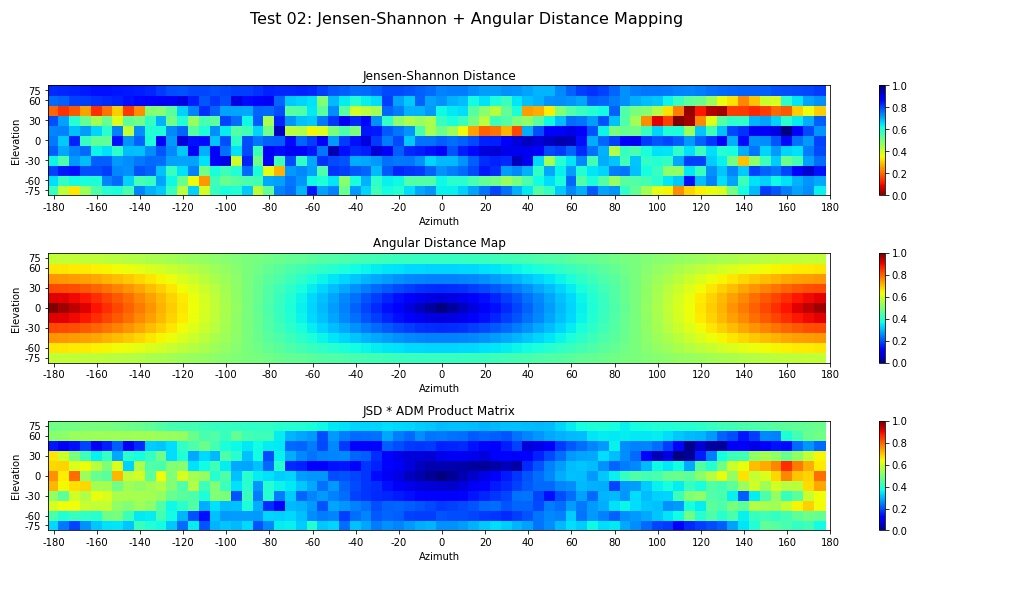

Recreation and application of the techniques presented in Crawford, Audfray, & Jot’s “Quantifying HRTF Spectral Magnitude Precision in Spatial Computing Applications” (AES E-Library link)

Used to examine the spectral effects of a Binaural Chorus developed by Sam Platt (AES E-Library link)

Presentation: Live Binauralization of Theatre Performance

Presented at an internal tech symposium at the University of Michigan Duderstadt Center.

Built with Reaper + TouchDesigner, this program spatializes an actor’s mic feed based on their location on stage, producing a binaural output so the audience can experience the actor’s movements in 3D space.
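As a rough illustration of the position-to-spatialization step, the sketch below converts a stage position into the azimuth and distance a binaural panner would typically need, plus a simple inverse-distance gain. The coordinate convention (x to the listener's right, y straight ahead, in meters), the function names, and the gain law are all assumptions for the example; the actual Reaper + TouchDesigner patch is not shown here.

```python
import math


def stage_to_polar(x, y, listener=(0.0, 0.0)):
    """Return (azimuth_degrees, distance) of a stage position from the listener.

    Azimuth is 0 straight ahead and positive to the listener's right.
    """
    dx, dy = x - listener[0], y - listener[1]
    azimuth = math.degrees(math.atan2(dx, dy))
    distance = math.hypot(dx, dy)
    return azimuth, distance


def distance_gain(distance, reference=1.0):
    """Inverse-distance attenuation, clamped to unity inside the reference radius."""
    return reference / max(distance, reference)
```

An actor standing 2 m directly in front of the listener maps to an azimuth of 0 degrees at half gain, and moving stage right increases the azimuth.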

The Study running in the Unity Editor

Research Study:

Utilization of pitch as an element of binaural elevation localisation

Abstract:

Due to the computationally expensive nature of binaural audio representation and the necessity for custom-tailored HRTFs, readily available methods for 3D audio are still largely inaccessible and fall short of providing an adequate experience, particularly with regard to sound elevation. This study tests whether pitch can be introduced as an element of a sound source’s elevation, increasing listeners’ accuracy in locating the sound source.

Participants identified a series of binaurally-processed stimuli coming from a digital speaker array to test their accuracy across multiple trials. The data collected in this study did not support the hypothesis that a pitch element increases accuracy; instead, it diminished listeners’ ability to localize the sound source. Further and more varied study is required to form a conclusion on the utilization of pitch as a localization element.

Paper available upon request

‘Pretty Hurts’ 360 Video/Ambisonic Recording

Featuring University of Michigan's The Sopranos performing "Pretty Hurts", originally performed by Beyoncé

As the first 360 recording session done at the University of Michigan, this recording was made to experiment with and establish a workflow and best practices for recording spatial music. From this I discovered that traditional practices such as room mics and X-Y pairs are not well suited to spatialization, but found that the combination of a center Ambisonic microphone supplemented by other microphones at the point of the sound source (Solo Vox + Vocal Perc here) spatializes properly while allowing for greater mix control. Next steps include sessions with each sound source individually mic'ed, and experimenting with creative spatialization of additional elements.

Paper:

Utilizing Psychoacoustic Principles to Inform Artistic and Technical Decisions in Audio for Virtual Reality

This paper surveys the history of psychoacoustic studies, the fundamentals of acoustic perception, and the mathematical concepts of Ambisonics, and combines them to examine how we perceive sound in a 3D environment and to discuss best practices for applying these principles.

Paper available upon request